Voice Analytics and Artificial Intelligence:

Future Directions for a post-COVID world

By Saurabh Goorha and Raghuram Iyengar

At CES 2020, Amazon announced there are “hundreds of millions of Alexa-enabled devices globally now”.1 With modest beginnings of the Audrey system built by Bell Labs in 1952 that could recognize only digits said aloud, the business of voice has exploded.2 A key enabler for the increasing adoption of voice communication has been the rise of the Internet of Things (IoT) connecting billions of physical devices. The primary modality for interactions with such devices is via voice. The increasing adoption of IoT devices, and advances in voice analytics is changing the availability of products, consumption of product experiences, and giving rise to a new ecosystem of companies that are participants and enablers of these products in the current and post-COVID world.

The rise of IoT and Voice-based Artificial Intelligence (AI) has led to enhanced attention to privacy and to monetization strategies that use private consumer data, e.g. Cambridge Analytica and the associated data leaks.3 In the post-COVID world, which is likely to be largely virtual and digital, the threshold of acceptance for any data analytics AI solution with utopian promises of convenience will be higher, as governments, policy watchdogs, media, and consumers pay attention. While “data is the new currency, or oil” is already a well-established truism, Voice AI will raise more concerns because it is based on an opted-in use case of consumers relying on IoT devices in personal spaces, e.g. home offices or cars. Shoshana Zuboff, a professor emerita at Harvard Business School, refers to this as “Surveillance Capitalism” and argues that voice-enabled devices and AI, amongst other data capture tools, are leading to “One Voice”: the dominant ecosystem that would give its operator “the ability to anticipate and monetize all the moments of all the people during all the days”.4 5

Will Voice AI become a Frankenstein’s monster that ends up breaking Asimov’s Laws of Robotics by disobeying human voice commands or harming humans? Or will the adoption of Voice AI in disembodied voice assistants enrich human lives as they become more humanized? There is a debate in the media, in Hollywood, among influential leaders in the technology industry (Musk vs. Zuckerberg), and in governments on AI and its impact on humanity. Our intent is to add to this discussion by showcasing why voice analytics and AI matter and the potential for dramatic shifts in the ecosystem of voice-enabled technologies for business and consumers. We also highlight the caution that players in this ecosystem need to exercise, as forthcoming innovations may change the benefits and costs of voice technology.

For consumers, home automation and media and entertainment have been two areas at the forefront of change in recent times. The availability of smart home systems that connect to speakers, security systems, door locks, lighting, and thermostats has altered how consumers interact with these devices. The use of these devices is also leading to more humanized technology, with people saying “thank you” and “sorry” while talking to these devices and treating them akin to a friend.6 Given the ease of using voice relative to other forms of search (e.g. typing on a device), close to half of all online consumer searches are predicted to originate from voice by the end of 2020.7 As a result, voice shopping is projected to expand to a $40 billion business by 2022.8 A signal of how integrated voice-activated devices have become in our lives comes from a recent study suggesting that close to three-quarters of people who own such devices say that the devices are a big part of their daily routines.9

Voice technologies and voice AI analytics are changing business and professional services industries too, especially given the current pandemic. While audio and video chats for business meetings were already on the rise in the last few years,10 COVID-19 has accelerated their use. Consider the following staggering statistic: 200 million Microsoft Teams meeting participants interacted on a single day in April 2020 and generated more than 4.1 billion meeting minutes.11 As another example, the closure of large customer call centers staffed by agents has led service providers like banks and insurance companies to offer self-service online options. Given this change, there have been numerous stories of service failures. Travelers looking to modify their itineraries with the Canadian airline WestJet experienced waits in excess of 10 hours, and banks and credit card companies, including Capital One, are seeing longer-than-average hold times, with some customers reporting disconnections.12 Voice-assisted chatbots were already being adopted by call centers in pursuit of efficiency, and the current pandemic makes it likely that this technology will permanently take over tasks that humans do. In other domains, the synergy between Natural Language Processing (NLP) and AI technologies is blurring the boundaries between humans and technology. For example, physicians are increasingly relying on AI-assisted technologies that convert voice-dictated clinical notes into machine-understandable electronic medical records; combined with analysis of diagnostic images in disease areas such as cancer, neurology, and cardiology, these records are uncovering relevant information for decision making.13

We believe there are two core trends at the heart of the disruption around voice analytics, namely (1) the adoption of IoT and cloud technologies that utilize AI and Machine Learning (ML), which we alluded to earlier, and (2) advances in psycholinguistic data analytics and affective computing that allow for inferring emotions, attitude, and intent with data-driven modeling of voice. We discuss the latter next.

Advances in Psycholinguistics, with Artificial Intelligence and Machine Learning

The study and application of human speech have exploded with the integration of computational linguistics and affective computing, and with the scalability offered by AI and ML models and technologies. Companies and researchers are developing new scalable approaches for automated speech recognition, e.g. using neural-network language models, with techniques from linguistics and experimental psychology combined with rigorous data analytics.14

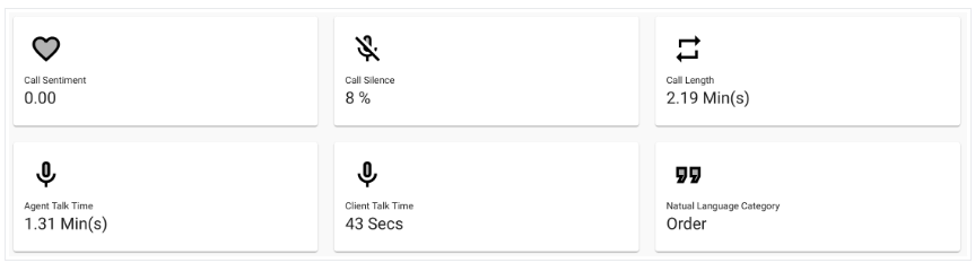

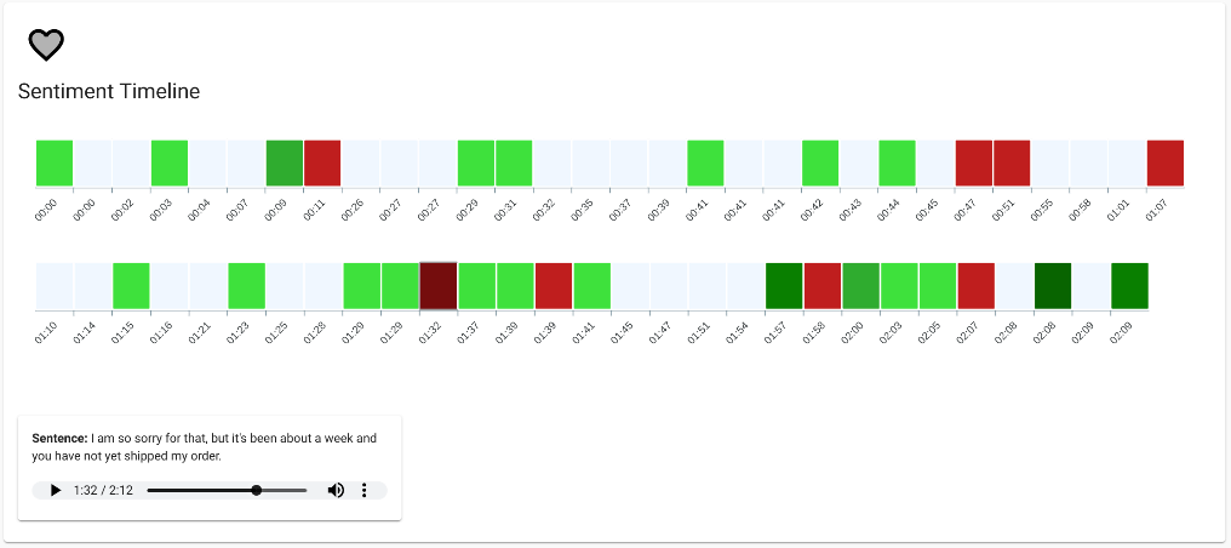

As an example, Google has built the Speech Analysis Framework within Google Cloud to transcribe audio, create a data pipeline workflow to display analytics of the transcribed audio files, and visually represent the data.15 Its direct application is for contact centers, where millions of calls may be recorded and it isn’t practical to analyze all the data manually. An automated way of analyzing such audio calls, capturing the interactions with customers, is critical to help answer operational questions, e.g. “Who are our best live agents?” and “Why are customers calling us?”. Figure 1 shows an example of how any call may be summarized using key metrics that include the length of the call and the call sentiment. Figure 2 shows how sentiment fluctuates across the complete timeline of a call and how an analyst can then drill down into a specific part of the conversation for playback if needed. Companies in multiple industries have already applied advanced analytics on data generated from customer contact centers to reduce average handle time by up to 40 percent, increase self-service containment rates by 5 to 20 percent, cut employee costs by up to $5 million, and boost the conversion rate on service-to-sales calls by nearly 50 percent – all while improving customer satisfaction and employee engagement.16

Figure 1: Summary of a call between a client and an agent

Figure 2: Automated sentiment analysis from a call with drill-down heatmap
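To make the call summary and sentiment timeline described above more concrete, here is a minimal Python sketch. It is not Google's framework: the `Utterance` type, the `summarize_call` helper, and the tiny word lists are hypothetical stand-ins, and it assumes the call has already been transcribed into timestamped utterances. A production system would likely replace the toy lexicon with a trained sentiment model.

```python
from dataclasses import dataclass

# Hypothetical toy lexicon; a real pipeline would use a trained sentiment model.
POSITIVE = {"thanks", "great", "perfect", "helpful", "resolved"}
NEGATIVE = {"problem", "frustrated", "cancel", "waiting", "wrong"}


@dataclass
class Utterance:
    speaker: str      # e.g. "agent" or "client"
    start_sec: float  # offset from the start of the call
    end_sec: float
    text: str         # transcript of this turn (assumed already transcribed)


def score(text: str) -> float:
    """Return a sentiment score in [-1, 1] from lexicon hits (0.0 if none)."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def summarize_call(utterances):
    """Build call-level metrics plus a per-utterance sentiment timeline."""
    timeline = [(u.start_sec, u.speaker, score(u.text)) for u in utterances]
    duration = max(u.end_sec for u in utterances) - min(u.start_sec for u in utterances)
    mean = sum(s for _, _, s in timeline) / len(timeline)
    return {"duration_sec": duration, "mean_sentiment": mean, "timeline": timeline}


call = [
    Utterance("client", 0.0, 6.0, "I have a problem and I am frustrated."),
    Utterance("agent", 6.0, 12.0, "Sorry about that, let me check."),
    Utterance("client", 12.0, 18.0, "Great, thanks, that was helpful!"),
]
summary = summarize_call(call)
for start, speaker, sentiment in summary["timeline"]:
    print(f"{start:>5.1f}s  {speaker:<6}  {sentiment:+.1f}")
```

The timeline entries keep their start offsets, so a dashboard could plot sentiment against time and jump to the matching audio segment for playback.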

One industry leading in the potential of voice analytics is Media and Entertainment, and it has already prepared consumers for what reality may look like in the future by showcasing AI digital assistants replacing living sentient beings. In the quasi-real fiction movie “Her”, Joaquin Phoenix’s engagement with a voice-enabled AI assistant, “Samantha”, serves his human need for companionship. This context seems eerily applicable to what humanity is currently facing with COVID-19-driven social distancing and isolation.

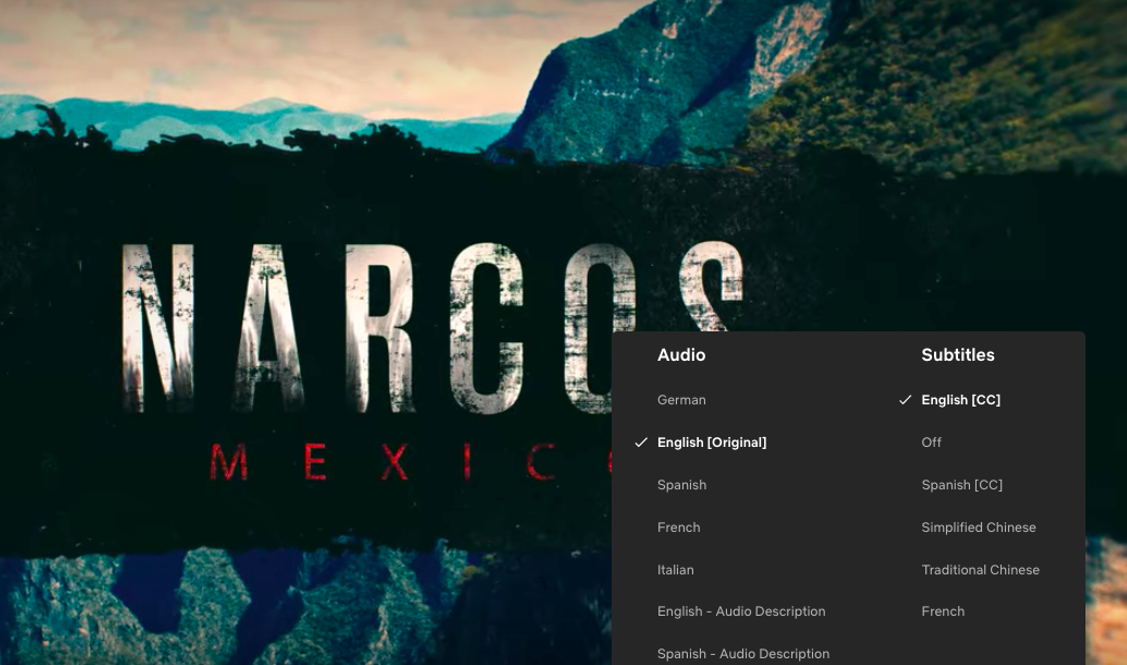

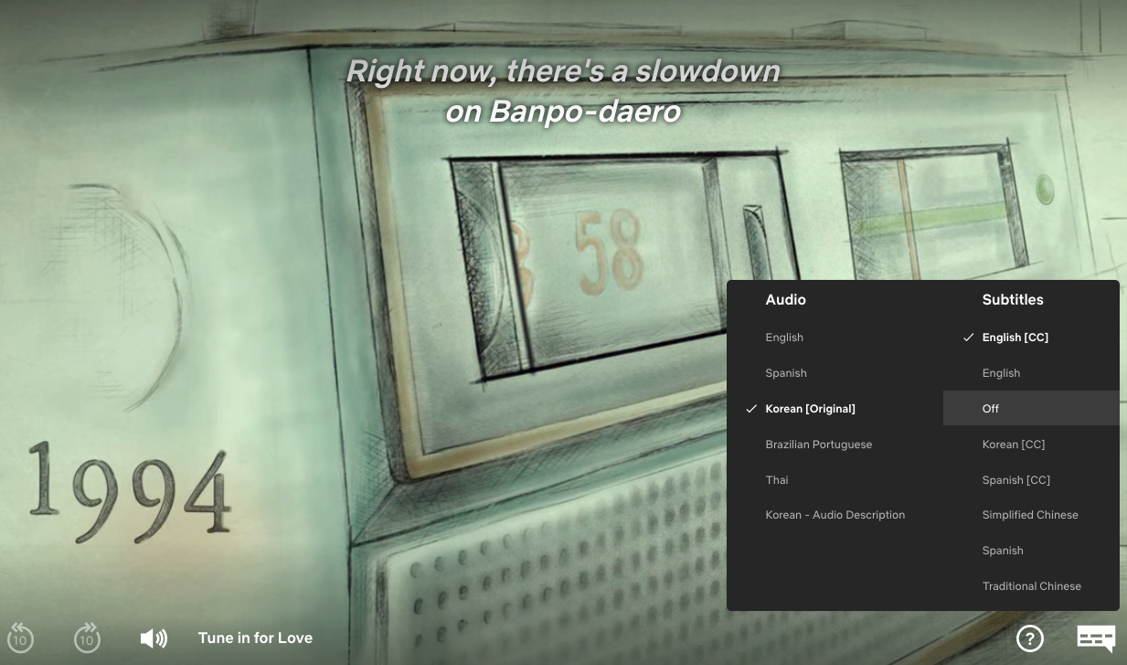

From a technology perspective, AI algorithms form the core of Samantha’s “humanity” as she parses speech, emotions, and intent. These same algorithms are also accelerating the adoption of streaming services. With the COVID-19-driven increase in streaming media consumption, studios are starting to release major movies on direct-to-consumer platforms, and this has caused major friction among stakeholders in the movie industry.17 Investments in content by leading streaming providers Netflix, Amazon Prime, and Disney+, as well as heavily funded start-ups such as HBO Go and Quibi, lend themselves well to utilizing AI and ML built on top of voice analytics. While the use of streaming devices implies minimal marketing cost to deliver the same content universally, subtitles or language translation are critical to obtaining a global audience. This is where technology solutions that integrate speech recognition (e.g. Google Cloud Speech API and Natural Language API) are required, for subtitles, closed-captioning, and other cases where audio has to be transcribed to text. Figure 3 shows an example of how Netflix supports multi-language audio for movies and shows, creating global audiences. Some companies such as Amazon are well ahead of the curve, with others following suit – Amazon Fire TV has inbuilt voice-activated features, which, combined with connected TV and the use of voice analytics technologies, can gauge sentiment, emotions, and intent towards specific content, artists, and music.

Figure 3: Example of Netflix multi-language audio for shows and movies in US

Academic research has been a fertile ground to bridge NLP, AI, and psycholinguistic data analysis for business applications. As an example, Deborah Estrin, a professor at Cornell Tech and a recipient of a 2018 MacArthur “genius” grant, is looking at how podcasts, a portable and on-demand form of audio content with 67 million monthly listeners in the United States, can be measured for seriousness and energy in order to predict their popularity.18 The stakes are large for voice analytics in the booming podcast industry, as $700 million was spent on podcast advertising in 2019 as per estimates by IAB and PWC. Spotify spent $250 million to expand audio content in 2019, Luminary and Apple made inroads in the space, and major Hollywood talent agencies – UTA, William Morris Endeavor, and Creative Artists Agency – have started representing podcasts.19

Similarly, Lyle Ungar at the University of Pennsylvania and his collaborators have been sifting through millions of social posts of video, audio, and text content to separate signal from noise using ML, and addressing mental health use-cases by looking at differences in people’s language structure, or the kinds of words they use, that might indicate a disorder or cognitive decline.20 One of the authors of this note has also done research to build a trend detection SaaS product that processes aggregated content for corporate risk and reputation management use-cases, with applications for video, audio, and text content; the work has been cited in patents granted to HP for other applications.21 Adverse media screening, also known as negative news screening, allows risk and compliance departments of financial institutions and corporations to interrogate third-party data sources for negative news associated with an individual or company.22 The use of AI and analytics on voice content from phone (including VOIP) and broadcast sources, as well as on other video and text content types, helps uncover financial crimes, manage customer risk, and reduce the cost of doing business. This is a booming business, with the projected total cost of financial crime compliance, which includes adverse media screening, reaching $180.9 billion.23

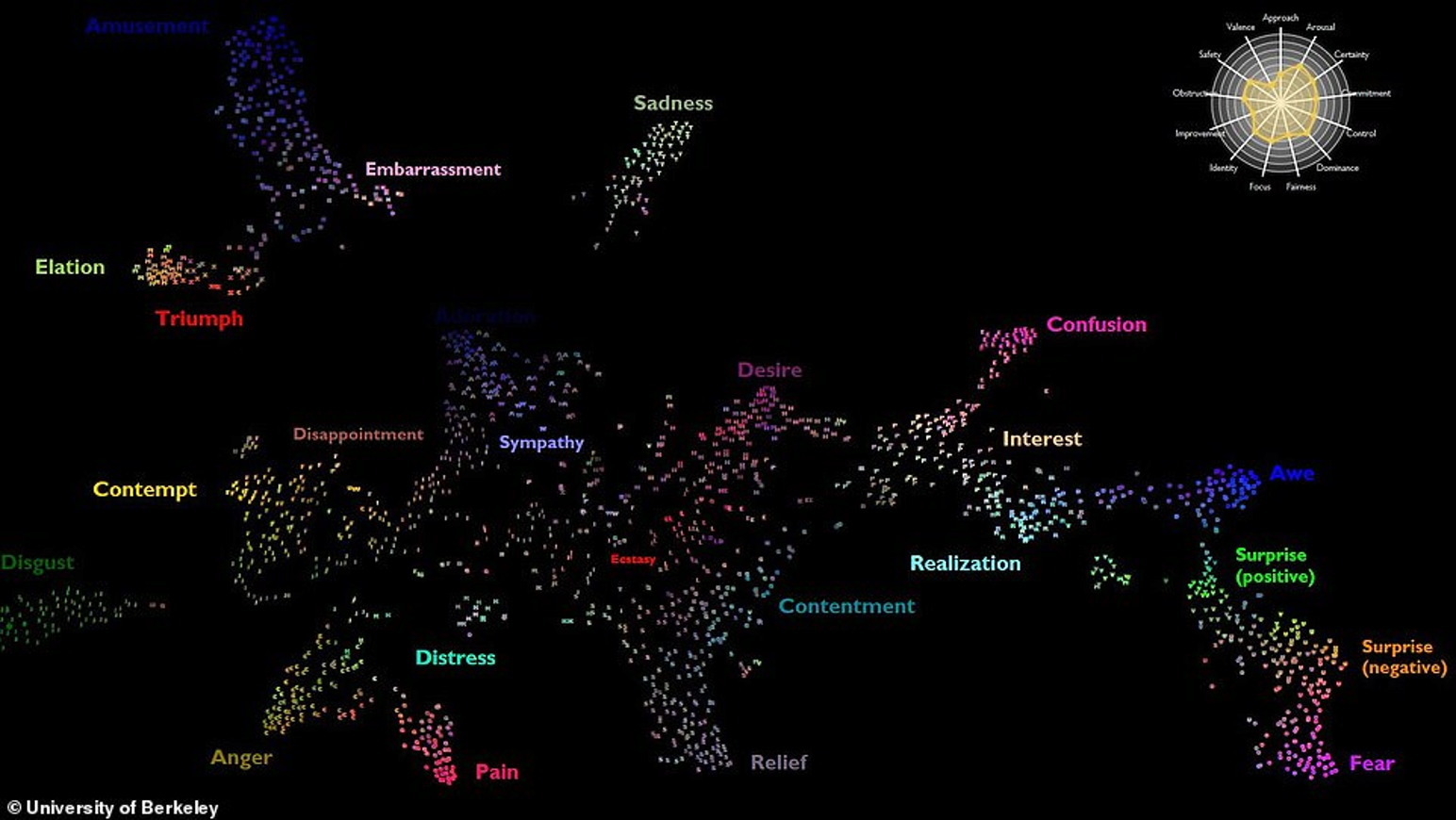

The voice analysis of function words – the pronouns, articles, prepositions, conjunctions, and auxiliary verbs that are the connective tissue of language – offers deep insights into a person’s honesty, stability, and sense of self, and gives a sense of their emotional state and personality, and of their age and social class.24 Newer techniques look into how words are spoken and at non-word sounds, e.g. vocal bursts, to identify emotion.25 Figure 4 below shows how vocal bursts can be used to identify granular emotions that can indicate intent. As a testament to the importance of the link between new technology and consumer behavior, there is a new Technology and Behavioral Science research initiative at The Wharton School that is considering several facets.26
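As a rough illustration of function-word analysis on a transcript, the sketch below computes the share of tokens falling into each function-word category. The word lists are a small hypothetical subset chosen for the example, not a published lexicon, and `function_word_profile` is an illustrative name. Linking such rates to honesty, emotion, or personality would require a validated model, which this sketch does not attempt.

```python
import re
from collections import Counter

# Small illustrative subset; published function-word lists are much longer.
FUNCTION_WORDS = {
    "pronoun": {"i", "me", "my", "we", "you", "he", "she", "they", "it"},
    "article": {"a", "an", "the"},
    "preposition": {"in", "on", "at", "of", "to", "with", "for"},
    "conjunction": {"and", "but", "or", "because"},
    "auxiliary": {"is", "am", "are", "was", "were", "have", "has", "do", "will"},
}


def function_word_profile(transcript: str) -> dict:
    """Return the fraction of tokens in each function-word category."""
    tokens = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter()
    for tok in tokens:
        for category, words in FUNCTION_WORDS.items():
            if tok in words:
                counts[category] += 1
    n = len(tokens)
    return {c: (counts[c] / n if n else 0.0) for c in FUNCTION_WORDS}


profile = function_word_profile("I was at the store and I have the receipt")
for category, rate in profile.items():
    print(f"{category:<12} {rate:.2f}")
```

Rates rather than raw counts are used so that transcripts of different lengths can be compared.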

Technology solutions have to be inclusive to benefit non-representative minorities, and creative solutions often arise in challenging circumstances. During the COVID-19 pandemic, an eGovernance solution called Aarogya Setu – Interactive Voice Response System (IVRS) has been successful in India. The system is designed to reach the part of the population that does not use smartphones and to help them understand the symptoms of coronavirus and how to prevent it from spreading.27

Figure 4: Audio map from 2000 nonverbal sound exclamations conveying 24 kinds of emotions

Emerging Trends in Post-COVID world

As the adoption of IoT and voice-enabled technology has become mainstream, we see a post-COVID world of innovation in voice technology products and analytics, and disruption in the traditional growth of the ecosystem that supports it. We lay out emerging macro- and micro-trends in this space, specifically the emergence of build and buy local products globally; companies solving for the “last mile” of language adoption; improved solutions for voice data hogging; investment in and marketing of privacy by design; and better Perception AI leading to artificial emotional intelligence becoming real.

1. Build and buy local

The simultaneous occurrence of both universalizing and particularizing tendencies, referred to as glocalization,28 will become increasingly applicable to the ecosystems of companies supporting voice-enabled AI technologies. When governments start making regulatory changes to support “build and buy local” to stimulate their economies in a post-COVID world, the “home-market effect” identified by Nobel laureate Paul Krugman29 will lead to further concentration of innovation targeted towards local consumers. The Chinese government is pushing smart voice as one of its four areas for AI development, leading to innovations by companies including Xiaomi, Baidu, and iFLYTEK. The voice AI technology developed by iFLYTEK has led to an average of 4.5 billion interactions daily.30 Similarly, in India, Reliance Jio has built the largest voice-enabled network, with 388 million subscribers since its launch in 2016, and leads the local market in offering voice solutions and an ecosystem of eCommerce built on top of them.31 Yandex is a leading EU internet provider, owns 58% of the search market in its native Russia, and has seen 35 million users since the 2017 launch of its Alice voice AI assistant.32 Interestingly, Alice developers relied on the voice of a Russian actress who also provided the voiceover for Scarlett Johansson’s AI character in the movie “Her” (Figure 5 below).

Figure 5: Joaquin Phoenix has a relationship with the disembodied voice AI “Samantha” in “Her”

2. The “Last-Mile” for Language Adoption

Voice-enabled technology will expand to cover hundreds of global languages, including local dialects within larger countries – the proverbial “last mile” for reaching the hardest segment with any network solution. This move has massive potential, as it opens new segments of consumers. With more than 2,000 distinct languages, Africa has a third of the world’s languages (with less than a seventh of the world’s population),33 and India alone has 19,500 language dialects, with 121 languages spoken by 10,000 or more people.34 In the midst of COVID-19, Microsoft has announced that Microsoft Translator, enhanced with AI and Deep Neural Networks, will offer real-time translation in five additional Indian languages, bringing the total to ten languages and allowing 90% of Indians to access information in their preferred languages.35 The “Universal Translator” first described in Murray Leinster’s classic novel First Contact may well become reality.36

A secondary benefit of this language adoption is that AI and speech-to-text analytics are allowing endangered languages to be preserved. A traditional challenge for niche languages has been the absence of adequate data sets to train AI platforms. New techniques, technologies, and psycholinguistics help with languages that, while spoken, do not have as many formal linguistic tools (dictionaries, grammatical materials, or extensive classes for non-native speakers) as languages such as Spanish or Chinese. For example, Rochester Institute of Technology is using deep learning AI to help produce audio and textual documentation of the Seneca Indian Nation language, which is spoken fluently by fewer than 50 individuals.37

We caution that speech recognition accuracy requires continual investment in heterogeneous, representative datasets and in newer AI models and technologies. Language dialects affect accuracy, and speech recognition performs worse for minorities, including women and non-white people. A study has suggested that Google speech recognition has a 78% accuracy rate for Indian English and a 53% accuracy rate for Scottish English, and is 13% more accurate for men than it is for women.38
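One common way to quantify such gaps is word error rate (WER), computed separately for each speaker group. The sketch below is a minimal illustration under stated assumptions: the group labels and sample transcripts are invented, the helper names are hypothetical, and the study cited above may have used a different accuracy metric.

```python
def word_edit_distance(ref: list, hyp: list) -> int:
    """Levenshtein distance over words (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def wer_by_group(samples):
    """samples: iterable of (group, reference_text, recognized_text).

    Returns total word errors divided by total reference words per group.
    """
    errors, words = {}, {}
    for group, reference, hypothesis in samples:
        ref, hyp = reference.lower().split(), hypothesis.lower().split()
        errors[group] = errors.get(group, 0) + word_edit_distance(ref, hyp)
        words[group] = words.get(group, 0) + len(ref)
    return {g: errors[g] / words[g] for g in errors if words[g] > 0}


# Invented examples: the same command recognized for two hypothetical groups.
samples = [
    ("group_a", "turn on the kitchen lights", "turn on the kitchen lights"),
    ("group_b", "turn on the kitchen lights", "turn on the chicken light"),
]
rates = wer_by_group(samples)
print(rates)
```

Pooling errors and reference words per group, rather than averaging per-utterance rates, keeps short utterances from dominating the comparison.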

3. Freedom from Voice Data Hogging

Voice and video are data hogs, and the proliferation of IoT devices for consumer and business applications has been constrained by platform infrastructure. Broader adoption of Edge Computing and the rollout of 5G networks will dramatically change the availability and adoption of voice-enabled products, as these advancements will allow the data generated by voice-enabled IoT devices to be processed at the source itself. A Gartner report indicates that the percentage of enterprise-generated data that is created and processed outside a traditional centralized data center or cloud will increase from 10% to 75% by 2022.39 The use of voice analytics will sharply increase as new technologies such as Edge Computing become mainstream and sophisticated ML models running on the edge synthesize speech in real time, allowing companies to scale and target connected customers.

We also predict that COVID-driven demand for virtual communication will serve as a forcing function for companies, requiring them to commercialize investment in network and computing capacity with a much shorter time horizon for productization (than the traditional decade of research), with research-to-productization eventually mirroring Moore’s Law (which posits chip capacity doubling every few years). Facebook recently announced an investment in the 2Africa project, which is laying 22,900 miles of underwater internet cable connecting Europe, the Middle East, and 21 landings in 16 countries in Africa, doubling the continent’s internet capacity.40 Huawei has more than 50 commercial 5G contracts globally and has already been researching 6G network technology, which will be correlated with advancements in computing and chip design.41 Quantum Computing is becoming real: Google announced in October 2019 that it had achieved “quantum supremacy”, and IBM has doubled the computational power of its quantum computers annually for the past four years.42 Quantum Computers will be able to perform machine learning analysis on large and complex datasets of audio and video generated by IoT sensors and microphones in a fraction of the time.

We caution that productization of quantum computing requires a few fundamental technical challenges to be addressed, though hardware and software majors and many startups are working on it. The initial impact and use-cases in the coming decade may start off in non-voice AI industries, e.g. cutting development time for chemicals and pharmaceuticals with simulations; solving optimization problems with unprecedented speed; accelerating autonomous vehicles with quantum AI; and transforming cybersecurity.43

4. “Privacy by Design” (PbD)

The adoption of voice-enabled technology for common use-cases by consumers at home, in their vehicles, at work, at the store, or in any setting that offers convenience, is contingent on consumers trusting the privacy of their data. Consumer trust is fragile and costly, and news of companies allowing employees to access and review personal consumer voice recordings captured by Amazon Alexa44 and Google Assistant45 devices in people’s homes does little to inspire confidence. Controlled hacking tests by companies and researchers have shown the vulnerability of voice-activated devices: by inserting hidden audio signals, testers could secretly make the devices dial phone numbers or open malicious websites.46

We note that going forward, as companies rely on first-party data relationships, earning consumer trust will become paramount. We foresee companies gaining competitive advantage by taking the lead in engendering trust and incorporating Privacy by Design47 to ensure that personally identifiable information (PII) in systems, processes, and products is protected. The glocalization trend implies that the patchwork of consumer privacy laws, e.g. GDPR in the EU, CCPA in the US, LGPD in Brazil, NDPR in Nigeria, etc., will remain and that no uniform standard will be adopted. This disparity in laws will be an opportunity for companies to lead by example and earn consumer trust by directly promoting ideas like “PbD Inside” to consumers – analogous to the “Intel Inside” strategy, which turned a hidden internal chip into a brand, and that brand into billions in added sales and consumer trust for Intel.48

5. Artificial Emotional Intelligence (AEI) becomes Real

Consumer adoption of and engagement with voice-enabled technologies are social interactions that elicit emotions. We foresee advances in Artificial Emotional Intelligence becoming mainstream and allowing for more nuanced reactions to human emotions. As voice becomes a natural medium for humans to interact (or search), it will lead to improvements in measuring intent from voice recognition and voice analytics – this is similar to how text-based search intent continues to improve with advances in neural network-based ML techniques for natural language processing (NLP).49 The affective computing market is estimated to grow to $41 billion by 2022, and “emotional inputs will create a shift from data-driven IQ-heavy interactions to deep EQ-guided experiences, giving brands the opportunity to connect to customers on a much deeper, more personal level”.50 The accuracy of emotion detection will improve significantly, and we expect that AEI will combine voice with visual, biometric sensor, and other data to power emotional AI applications that offer better experiences, better design, and better customer service for companies and their customers.51

We have showcased why Voice AI and analytics matter, along with their impact, risks, and possible future directions. Voice AI can offer many advantages to society and business but requires careful attention to the associated risks. More broadly, Perception AI, which covers the gamut of sensory inputs including vision, smell, and touch in addition to voice, can lead to more humanized technology that brings dramatic changes in how businesses and consumers interact with products. Voice AI is the tip of the spear of Perception AI!

Saurabh Goorha is a Senior Fellow at AI and Analytics for Business and CEO of AffectPercept, a Perception AI advisory and analytics firm. Raghuram Iyengar is Miers-Busch W’1885 Professor and Professor of Marketing at the Wharton School and Faculty Director of AI and Analytics for Business.
